circumventing the surveillance state

can clothing make you invisible to facial recognition software?

The seed of this post was planted by *this* post on CAPTCHA clothing from Esque last year, and I’ve been fixated on the idea of clothing as an anti-surveillance device ever since. Thanks to Adam Harvey, whose work I hope I’ve done justice to. And apologies to anyone who knows more about this stuff than I do (“this stuff” being facial recognition software) and wants to tell me I’ve got it all wrong: I want to learn!

Every sartorial aspect of protest—from the sober suits and dresses of the civil rights movement to the jubilantly short hemlines of the SlutWalk, from the grass ‘fur’ coats at the 2019 Extinction Rebellion London Fashion Week protest to the ubiquitous pink pussy hats of the 2017 Women’s March—is crafted to send a message. But in recent years, facial recognition software has altered the calculus of symbolic, attention-getting protest dress, requiring those who choose visibility to reckon with the risk of diminished anonymity.

Identifying and targeting protestors via facial recognition software is a sinister and relatively recent law enforcement tactic.[1] Earlier this year, the University of Melbourne used surveillance footage and facial recognition tools to identify student protestors who had staged a multi-day pro-Palestinian sit-in. The university emailed the students CCTV images of themselves, threatened them with expulsion, and held misconduct trials within the university system for all twenty-one of them.

In 2019, a wave of pro-democracy protests in Hong Kong, sparked by a proposed extradition law, was met with an autocratic and violent crackdown. While the Hong Kong police never explicitly admitted to using facial recognition software to track down protestors, they had had access since 2016 to iOmniscient, a program with the ability to “scan footage including from closed-circuit television to automatically match faces and license plates to a police database and pick out suspects in a crowd.”[2]

And in 2020, the NYPD used facial recognition to identify and track down at least one Black Lives Matter protestor, sending dozens of officers, some in tactical gear, to the apartment building of a man named Derrick Ingram, who had allegedly ‘assaulted’ an officer by shouting into their ear through a megaphone.

While many protestors during the summer of 2020 wore masks to prevent the spread of Covid, masks had another practical benefit: concealing the lower half of the face created anonymity, transforming individuals into a crowd. But both college administrations and law enforcement have caught on to masking as a method of identity protection, as evidenced by the spate of anti-mask policies that have been revived, proposed, and in some cases enacted, especially within the past year.

“Ohio Attorney General Dave Yost sent a letter to the state’s 14 public universities alerting them that protesters could be charged with a felony under the state’s little-used anti-mask law, which carries penalties of between six to 18 months in prison.” - ACLU

So the question remains: when your face becomes a weapon that can be used against you, what can you fashion into a shield?

One way people have tried to evade facial recognition software is with makeup. When London’s Metropolitan Police were forced to admit that they had partnered with a property owner at King’s Cross (a shopping and transportation hub in central London) to install CCTV with facial recognition capabilities, protestors painted their faces with shapes and patterns intended to keep the cameras from detecting, and therefore identifying, their faces.

The group behind this movement called themselves the Dazzle Club, inspired by CV Dazzle, a strategy developed by multimedia artist and researcher Adam Harvey for his 2010 master’s thesis at NYU’s Interactive Telecommunications Program.[3] CV Dazzle was a form of camouflage intended to stay visible to the human eye while going undetected by machine vision systems. Rather than a single technique or pattern, it was an approach: it circumvented the Viola-Jones face detection algorithm, widely used at the time, “by using bold patterning to break apart the expected features of the face detection profiles.”[4] Russian activists adopted the same technique several years later to protest a similar expansion of surveillance technology in the hands of the Moscow police.
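
To make “the expected features” a little more concrete: Viola-Jones scores each window of an image with simple rectangle (“Haar-like”) features, the total brightness of one region minus another, computed quickly from an integral image. The sketch below is a toy illustration under loudly stated assumptions, not Harvey’s actual designs or a real detector: it uses hypothetical 4×4 grayscale patches, a made-up `THRESHOLD`, and hypothetical function names, and it collapses a whole detection stage into one feature. It checks one classic cue, an eye region darker than the cheeks below it, and shows how paint that inverts that contrast makes the check fail.

```python
# Toy illustration of one Haar-like feature, the building block of Viola-Jones.
# Assumptions (hypothetical): 4x4 grayscale patches (0 = black, 255 = white)
# and a hand-picked threshold; real detectors learn thousands of these.

THRESHOLD = 500  # hypothetical; real thresholds are learned during training


def integral_image(img):
    """Summed-area table with a zero row/column: ii[y][x] = sum of img[:y][:x]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y), in four lookups."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def eye_band_feature(img):
    """Two-rectangle feature: brightness of the lower half (cheeks) minus the upper half (eyes)."""
    ii = integral_image(img)
    h, w = len(img), len(img[0])
    half = h // 2
    eyes = rect_sum(ii, 0, 0, w, half)
    cheeks = rect_sum(ii, 0, half, w, half)
    return cheeks - eyes


def passes_eye_band_stage(img):
    """True if the patch shows a dark band above a bright band, like eyes over cheeks."""
    return eye_band_feature(img) > THRESHOLD


# Plain face: shadowed eye region on top, lighter cheeks below.
FACE_LIKE = [[20] * 4, [20] * 4, [200] * 4, [200] * 4]
# Dazzled face: bright paint across the eyes, dark paint on the cheeks.
DAZZLED = [[200] * 4, [200] * 4, [20] * 4, [20] * 4]

print(eye_band_feature(FACE_LIKE), passes_eye_band_stage(FACE_LIKE))  # 1440 True
print(eye_band_feature(DAZZLED), passes_eye_band_stage(DAZZLED))      # -1440 False
```

In a real cascade, a window is thrown out as soon as any stage’s combined score falls short, so disrupting the contrasts the early stages rely on can end the search before a face is ever reported. A detector built on different features, such as a modern neural network, has no reason to be fooled by the same paint.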

One benefit of camouflage in war is the flattening of identity, humanity fading into the landscape of combat. What I find interesting about dazzle camouflage is that, unlike traditional camouflage, it is intended to preserve that humanity for other humans to perceive, obscuring it only from facial recognition software.

Clothing designed to evade detection is another way to circumvent digital surveillance.

In 2020, Wired gave this advice, under the header ‘Dress to Unimpress’:

> Make yourself less memorable to both humans and machines by wearing clothing as dark and pattern-free as your commitment to privacy. Clothing search is a common feature of video surveillance software; it helps analysts track a person across different camera feeds.

Most purpose-built anti-surveillance clothing seems to consist of performance-art one-offs or nascent research projects. Adam Harvey’s work comes up again here, in a 2012 project called Stealth Wear: garments made from silver-plated fabric designed to shield the wearer from thermal surveillance cameras, with the aim of rendering drone warfare ineffective (and serving as an implicit critique of the West’s targeted military campaigns in the Middle East). While never manufactured on a wide scale, some of the garments were previously available for purchase at the Privacy Gift Shop, including an anti-drone hoodie ($475), hijab ($550), and burqa ($2500).

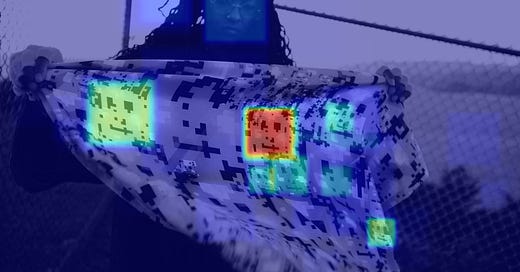

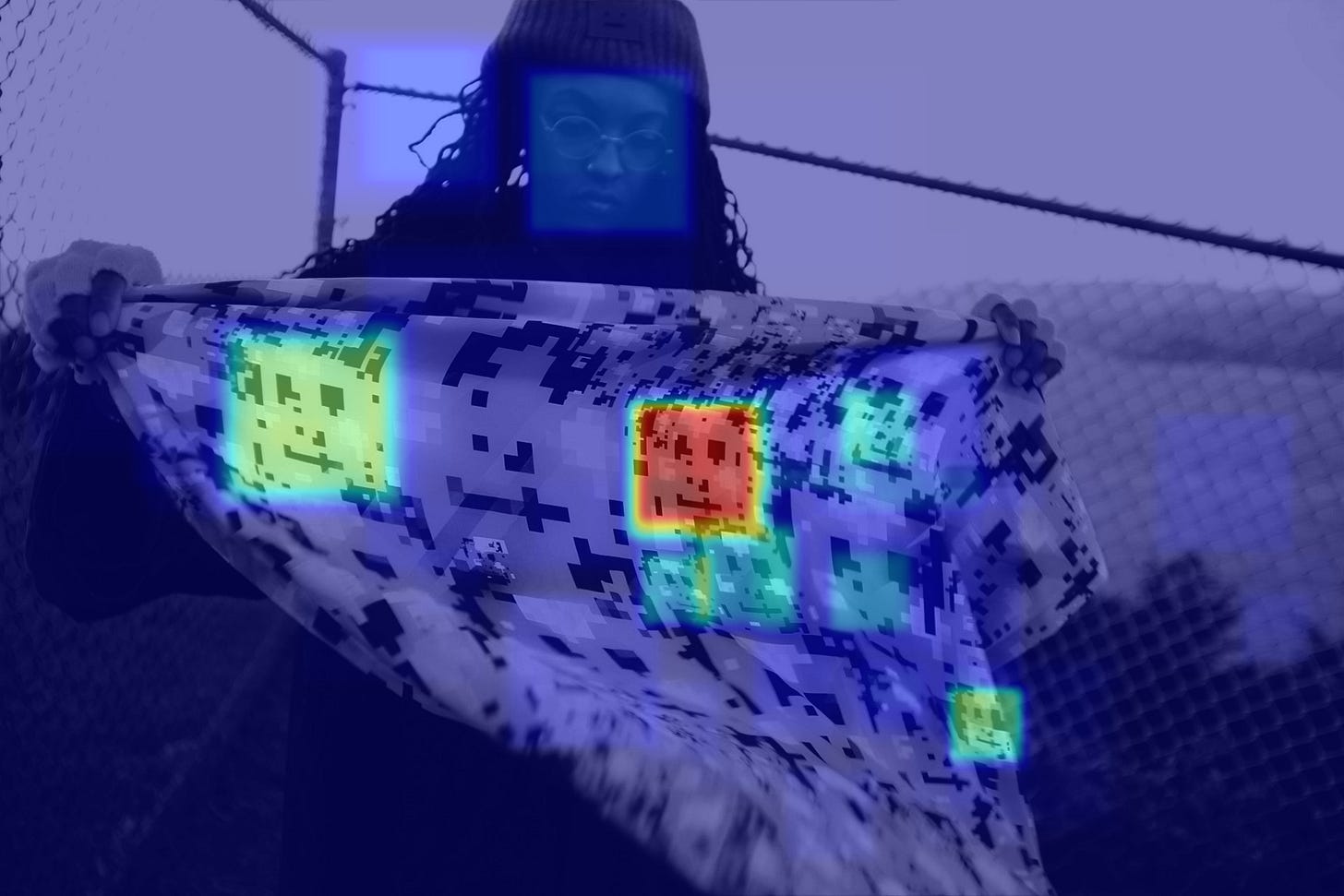

HyperFace, another Adam Harvey project, is designed to trick facial recognition software into identifying false faces. The HyperFace scarves (which exist only as renderings) are printed with high-contrast patterns containing crude, obvious approximations of facial features, meant to be picked up by the software instead of the actual face of the person wearing or using the garment.

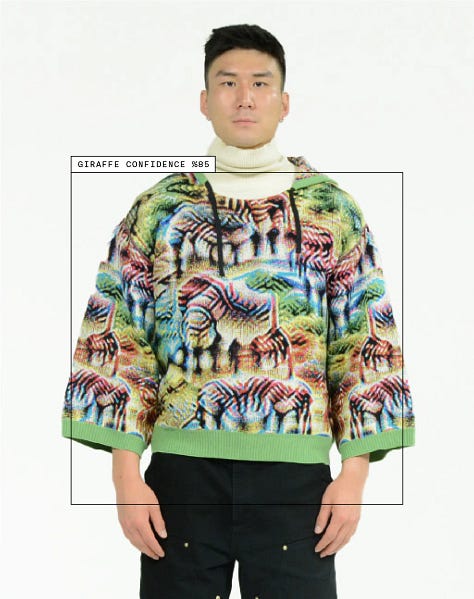

There is one brand of anti-surveillance clothing that seems to be operational and shoppable: Cap_able, whose website features ‘unisex cotton knitwear’ in patterns that look like the love child of Eckhaus Latta and a hologram. Pricing ranges from $460 for a short-sleeve shirt to $743 for a long-sleeve dress. The designers behind Cap_able posit that “by wearing a garment in which an adversarial image is woven, one can protect the biometric data of their face, which either will not be detectable or will be associated with an incorrect category, such as ‘animal’ rather than ‘person.’”[5]
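
Cap_able doesn’t publish how its patterns are generated, so what follows is only a generic sketch of what an “adversarial image” means, not their method. It assumes a hypothetical four-pixel “image” and a made-up linear classifier that outputs a probability of “person”, and applies the fast gradient sign method (FGSM), one well-known way to build adversarial inputs: nudge every pixel by a small amount `eps` in whichever direction lowers the model’s score. The weights, bias, and function names are all illustrative assumptions.

```python
import math

# Hypothetical toy model: a linear "person" score over four pixel values in [0, 1].
WEIGHTS = [0.5, -0.25, 0.75, 0.5]
BIAS = -0.75


def p_person(x):
    """Probability the toy model assigns to 'person' (logistic regression)."""
    logit = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-logit))


def fgsm_away_from_person(x, eps):
    """Fast gradient sign method: step each pixel by eps against the gradient of
    the 'person' logit, then clip back to the valid [0, 1] pixel range.
    For a linear model, that gradient is simply WEIGHTS."""
    adv = []
    for w, xi in zip(WEIGHTS, x):
        sign = (w > 0) - (w < 0)  # -1, 0, or +1
        adv.append(min(1.0, max(0.0, xi - eps * sign)))
    return adv


image = [1.0, 0.0, 1.0, 0.5]
adversarial = fgsm_away_from_person(image, eps=0.5)

print(round(p_person(image), 3))        # 0.679
print(adversarial)                      # [0.5, 0.5, 0.5, 0.0]
print(round(p_person(adversarial), 3))  # 0.438
```

The catch for clothing is that a woven pattern has to keep working through fabric folds, changing angles and lighting, and against models other than the one it was tuned on. That is one plausible reason a pattern that fools one system in a demo may do nothing against another.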

Has anyone actually worn this IRL? There are no tagged photos of people wearing it on the brand’s Instagram, though that would be unsurprising even if people were wearing these clothes out and about. And, more importantly, does it actually work? There’s a video on their YouTube channel of facial recognition software failing to detect someone wearing a Cap_able sweater, but there’s no description or context, and no mention of which software is being used. In the r/computervision subreddit, user @toma5al ran footage of a person wearing a Cap_able sweater through FindFace software and found that it detected a face anyway. Despite the paucity of evidence to support Cap_able’s claims, you can still click “add to cart.”

How will the landscape of anti-surveillance style change as facial recognition software and the capabilities of AI continue to evolve and improve?

Adam Harvey is careful to specify that the usefulness of his patterned prototypes depends on the type and capability of the facial recognition software and algorithms used for surveillance, and that the system they were designed to fool (the Viola-Jones algorithm and its Haar cascade classifier, revolutionary when published in 2001) is relatively crude and limited in scope compared with the neural networks available today. In fact, Harvey seems to have exploited one of its particular weaknesses in his designs (“Haar cascades are notoriously prone to false-positives […] the Viola-Jones algorithm can easily report a face in an image when no face is present”), a trick that wouldn’t necessarily work against today’s more sophisticated neural networks. (Also, the Viola-Jones algorithm is only a face detector, not an identifier: on its own, it can decide whether part of an image contains a face, but not whose face it is.)
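
The detector/identifier distinction is easier to see as two separate steps. In a typical modern pipeline (sketched here under assumptions, not describing any specific product), a detector first finds the face, a separate model then turns the cropped face into a list of numbers called an embedding, and identification means comparing that embedding against a database of known people. The embedding model is left out entirely here; the three-number vectors, the names `person_a` and `person_b`, and the 0.8 threshold are hypothetical stand-ins.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def identify(probe, gallery, threshold=0.8):
    """Return the gallery name most similar to the probe embedding,
    or None if even the best match falls below the threshold."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(probe, embedding)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Hypothetical database of enrolled faces (real embeddings are much longer).
GALLERY = {
    "person_a": [1.0, 0.0, 0.0],
    "person_b": [0.0, 1.0, 0.0],
}

print(identify([0.9, 0.1, 0.0], GALLERY))  # person_a
print(identify([0.5, 0.5, 0.7], GALLERY))  # None
```

The threshold is where the trade-off lives: lower it and the system returns more matches, including wrong ones (the failure mode behind the wrongful arrests mentioned in the footnotes); raise it and it misses more people. Camouflage aimed only at the detector, like CV Dazzle, works one step earlier: if no face is found, there is nothing to compare.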

Two years ago, another Reddit user, @dypraxnp, responded to a question about masks and facial recognition software in the r/privacy subreddit with a comment that feels eerily prescient (italics are my own emphasis):

> The future (and some current systems) will combine several attributes to improve accuracy. Walking behaviour analysis, clothing types you tend to wear, voice tone analysis and speech recognition et cetera. There are so many attributes that could be added up to a probability in sum. And even if the data points can not be created just from one camera they surely can with the inputs of many cameras, sensors, microphones, buying history and again et cetera. Those things will also be combined with smooth laws to "ensure individual safety" like prohibition of face masks.
>
> I think one of the best things one can do (if not the only in a long run) is to be actively against it. Spread the words, sign the petitions and go to the protests if there are any.

In his explanation of the constraints of HyperFace, Harvey states that ‘[c]amouflage, in general, should be considered temporary’. I read this as both a warning and a guideline. The perpetual refinement of facial recognition software makes it futile to settle on any one specific tool or garment as a failsafe method of evading detection. Whatever means of disguise become popular will eventually become obsolete, having lost through mass adoption the advantage of novelty that made them successful in the first place.

I’d encourage anyone who dismisses clothing and fashion as shallow pursuits to consider that we live in a world where there are political and material consequences to what we wear. Purchasing an expensive piece of brightly patterned knitwear won’t save you—but adaptation, ingenuity, and the pursuit of novelty all play a role in how easily (and anonymously) you move through the world.

Thank you to my paid subscribers—I appreciate your support! If you missed the update, I’ve added a voluntary paid tier to Rabbit Fur Coat. Everything will remain free, but if you’d like to support me via a monthly or yearly subscription, you now have that option.

SOURCES:

https://www.pbs.org/video/what-if-our-clothes-could-disrupt-surveillance-cameras-5c1an7/

https://www.theguardian.com/us-news/2024/apr/30/why-are-pro-palestinian-students-wearing-masks-campus

https://www.fastcompany.com/91116791/facial-recognition-technology-campus-protests-police-surveillance-gaza

https://www.vox.com/recode/2020/2/11/21131991/clearview-ai-facial-recognition-database-law-enforcement

https://petapixel.com/2023/01/20/this-clothing-line-tricks-ai-cameras-without-covering-your-face/

https://participedia.net/case/hong-kong-protestors-implement-methods-to-avoid-facial-recognition-technology-and-government-trackin

https://antiai.biz/

https://www.reddit.com/r/privacy/comments/uhya13/what_clothinghats_can_evade_facial_detection/

https://qz.com/10-fashions-to-help-you-confuse-facial-recognition-syst-1851112545

https://en.wikipedia.org/wiki/Cloak_of_invisibility

https://www.nbcphiladelphia.com/news/local/italian-start-up-brings-clothing-line-that-can-trick-ai-facial-recognition-to-philly/3665520/

https://newatlas.com/good-thinking/facial-recognition-clothes/

https://www.nytimes.com/2019/07/26/technology/hong-kong-protests-facial-recognition-surveillance.html

https://www.independent.co.uk/news/world/asia/hong-kong-protests-lasers-facial-recognition-ai-china-police-a9033046.html

https://www.bbc.com/news/world-asia-china-49340717

[1] In addition to being sinister, it’s also prone to error. Facial recognition software has led to multiple wrongful arrests based on false identifications, and false identifications happen more often with people of color.

https://www.jtl.columbia.edu/bulletin-blog/a-face-in-the-crowd-facial-recognition-technology-and-the-value-of-anonymity

https://www.aclu.org/news/privacy-technology/police-say-a-simple-warning-will-prevent-face-recognition-wrongful-arrests-thats-just-not-true
